Keeping Pace with AI – Toward Deeper Visibility, Control & Safe Innovation

When my team introduced the AI category in our Secure Web Gateway (SWG) a year ago, it was already clear that artificial intelligence-driven tools were becoming impossible to ignore. We knew that organizations would want to see and manage them – that the “shadow AI” risk was real even then. In the months since we launched, however, the AI landscape has sped ahead in ways that demand a fresh response: new classes of services, autonomous agents, and integrations that blur the lines between tool and actor.

Today, we’re taking the next step: refining our AI taxonomy with two additional granular categories and, in the future, enhancing control options at both the URL filtering and CASB layers. The goal is to help organizations stay ahead of risk – without thwarting innovation.

From “Generic AI” to a More Nuanced Taxonomy

At launch, our Generic AI category captured a broad span of AI-powered sites: platforms, APIs, services, and applications that incorporate machine learning or AI (see my blog article on “Enabling the Secure Usage of AI Web Tools”). It was a necessary first step, giving organizations much-needed visibility into AI traffic in their environments.

But over the past year, the AI universe has evolved dramatically:

- Generative models are producing images, video, audio, and code, often with a single prompt.

- AI agents are becoming “actors” in their own right, autonomously interfacing with APIs, third-party services, or internal corporate systems.

- Standards and protocols like Model Context Protocol (MCP) are enabling AI systems to tap into corporate data sources in real time, further blurring boundaries between AI tool and data pipeline.

Because of this diversity, treating all AI traffic under a monolithic “Generic AI” label is no longer sufficient. A one-size-fits-all category obscures critical distinctions in risk, usage patterns, and necessary controls.

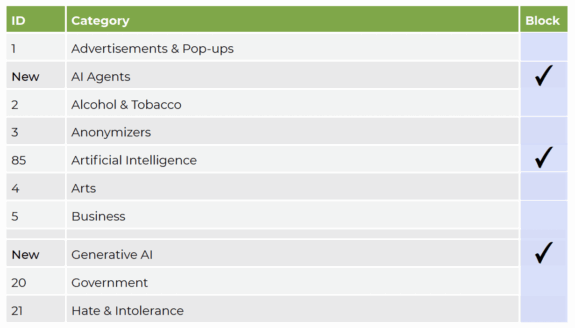

To address this, we now support two subcategories, in addition to the original:

- Generative AI: Platforms and services that use AI technology to generate content such as text, images and code, based on user prompts. (Think ChatGPT, Gemini, Claude, image or video synthesis services, code generation tools, and so on.)

- AI Agents: Autonomous systems or services that not only compute responses, but interact with their environment: connect to APIs, make decisions, trigger actions, and interface with external systems or corporate data sources. This category includes MCP servers, autonomous assistants, or agent orchestration frameworks. It also includes websites that provide or list AI agents.

By splitting Generic AI into these more specific buckets, we can help organizations see not just that AI is being used, but how it is being used.

Why These Distinctions Matter

1. Expanded Attack Surface & Invisible Connections

Generative AI is powerful, but its risks are relatively well understood: data leakage via prompts, improper handling of intellectual property, prompt injection, and the like. I discussed these risks in my earlier blog, and they remain very real.

AI Agents, however, introduce a new class of risk. Because they act, not just respond, they can initiate connections to third-party APIs, internal databases, and cloud services – sometimes invisibly. Such interactions might bypass existing controls, opening stealth channels for data exfiltration, lateral movement, or unsanctioned automation.

This agentic capacity means your security perimeter must evolve. It’s no longer just about being aware of AI sites, but also about keeping a pragmatic eye on the agents embedded in your workflows.

2. Policy Granularity & Risk Tiering

By distinguishing between Generic AI, Generative AI, and AI Agents, organizations can apply risk-based policies:

- Users handling low-sensitivity data may be granted broad generative AI access.

- For more sensitive groups or contexts, interactions via AI Agents may be restricted or closely monitored.

- Some systems may allow generative AI in a “warn first” mode, giving users awareness before they proceed, rather than an outright block.
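As a rough illustration of the tiering above, the sketch below maps a data-sensitivity tier and an AI category to a policy action. The tier names, the `POLICY` table, and the `decide` function are hypothetical and chosen only for this example; they are not our product’s configuration format. The fallback to "allow" is an assumption as well.

```python
from enum import Enum


class Category(Enum):
    GENERIC_AI = "generic_ai"
    GENERATIVE_AI = "generative_ai"
    AI_AGENTS = "ai_agents"


class Action(Enum):
    ALLOW = "allow"
    WARN = "warn"
    BLOCK = "block"


# Hypothetical rules keyed by (data-sensitivity tier, AI category).
POLICY = {
    ("low", Category.GENERATIVE_AI): Action.ALLOW,
    ("low", Category.AI_AGENTS): Action.WARN,
    ("high", Category.GENERATIVE_AI): Action.WARN,
    ("high", Category.AI_AGENTS): Action.BLOCK,
}

# Assumption for this sketch: anything without an explicit rule is allowed.
DEFAULT_ACTION = Action.ALLOW


def decide(sensitivity: str, category: Category) -> Action:
    """Return the action for a tier/category pair, or the default."""
    return POLICY.get((sensitivity, category), DEFAULT_ACTION)


if __name__ == "__main__":
    print(decide("low", Category.GENERATIVE_AI).value)
    print(decide("high", Category.AI_AGENTS).value)
```

Keeping the rules in a small lookup table makes it easy to see, per tier, where generative AI and agents are treated differently.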

These distinctions extend into reporting and analytics, making it possible for IT and security teams to answer questions such as “Which departments are invoking AI agents?” and “Which AI tools are actively in use?”
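To make those reporting questions concrete, here is a minimal sketch that answers both from a list of categorized log records. The record fields (`department`, `category`, `host`) and the sample hosts are assumptions made up for the example; real gateway logs will look different.

```python
from collections import Counter

# Hypothetical, already-categorized log records.
LOGS = [
    {"department": "finance", "category": "ai_agents", "host": "agents.example.com"},
    {"department": "finance", "category": "ai_agents", "host": "mcp.example.org"},
    {"department": "engineering", "category": "ai_agents", "host": "agents.example.com"},
    {"department": "engineering", "category": "generative_ai", "host": "chat.example.net"},
    {"department": "marketing", "category": "generative_ai", "host": "chat.example.net"},
]


def agent_usage_by_department(logs):
    """Count AI Agents requests per department."""
    return Counter(
        rec["department"] for rec in logs if rec["category"] == "ai_agents"
    )


def tools_in_use(logs):
    """Return the distinct AI hosts seen in the logs, sorted by name."""
    return sorted({rec["host"] for rec in logs})


if __name__ == "__main__":
    print(dict(agent_usage_by_department(LOGS)))
    print(tools_in_use(LOGS))
```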

Enhancing URL Filter Controls: Allow, Block, and Warn

In our first rollout, we used a simple allow/deny model for the AI category. That was necessary to integrate the new category without disrupting operational workflows. We defaulted AI to “allowed,” except in customers’ existing deny-all policies, and we coordinated with Technical Account Managers to set expectations and avoid surprises.

Now we’re introducing a third policy action: Warn. This is how it fits in:

- Allow: Transparently let access proceed.

- Block: Prevent access entirely.

- Warn: Display a user-facing message that this site may involve data risk, requiring explicit acknowledgment before the user proceeds.

This intermediate step gives organizations a soft control lever: visibility and user education without blunt interruptions. It supports a gradual, controlled approach to tighter AI governance.

These policy actions are available across all AI categories (Generic, Generative, Agent). Because each subcategory carries a different risk profile, admins can tailor default actions appropriately.
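The three actions can be sketched as a small decision flow. The `Gateway` class below is purely illustrative and is not how our URL filter is implemented; in particular, remembering an acknowledgment per user and category is an assumption of this sketch, as are the category keys and the "allow" fallback.

```python
from dataclasses import dataclass, field


@dataclass
class Gateway:
    # Hypothetical per-category actions: "allow", "warn", or "block".
    actions: dict
    # Assumption: an acknowledgment is remembered per (user, category).
    acknowledged: set = field(default_factory=set)

    def handle(self, user: str, category: str) -> str:
        """Return the outcome of a request to a site in the given category."""
        action = self.actions.get(category, "allow")
        if action == "block":
            return "blocked"
        if action == "warn" and (user, category) not in self.acknowledged:
            return "warning_shown"
        return "allowed"

    def acknowledge(self, user: str, category: str) -> None:
        """Record that the user explicitly accepted the warning."""
        self.acknowledged.add((user, category))


if __name__ == "__main__":
    gw = Gateway({"generative_ai": "warn", "ai_agents": "block"})
    print(gw.handle("alice", "generative_ai"))  # warning is shown first
    gw.acknowledge("alice", "generative_ai")
    print(gw.handle("alice", "generative_ai"))  # proceeds after acknowledgment
    print(gw.handle("alice", "ai_agents"))      # blocked outright
```

The point of the sketch is the middle branch: Warn behaves like Block until the user acknowledges the message, and like Allow afterwards.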

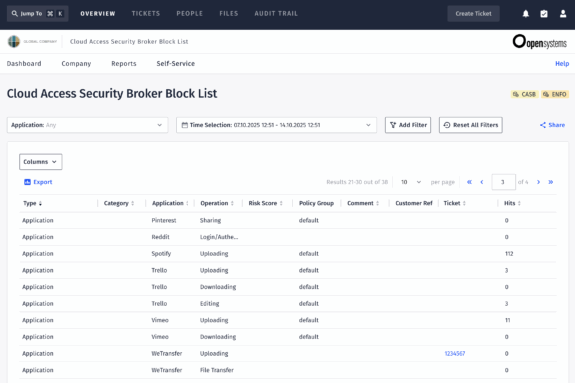

Deepening Control with CASB Integration

URL filtering is powerful, but once a user reaches an AI site, you may want to control how they use it. That’s where our Cloud Access Security Broker (CASB) plays a vital role.

By mapping these same AI categories into the CASB layer, organizations can:

- Discover shadow AI usage, i.e. services used outside the approved SaaS inventory.

- Enforce fine-grained policies on user actions for generative AI usage, for example blocking uploads and downloads.

- More generally, use CASB functionality such as data loss prevention (DLP) to block sensitive content from being sent into AI prompts, or to detect and control agentic behavior.

In short, CASB enables not just access control, but interaction control – a more advanced safeguard in a complex AI ecosystem.
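To give a feel for what interaction control means in practice, the sketch below scans outgoing prompt text for sensitive patterns before it reaches an AI service. It is a deliberately simplified stand-in for DLP: the two regular expressions and the function names are assumptions for illustration, and a real DLP engine would use far richer detection and validation.

```python
import re

# Hypothetical detection patterns, kept deliberately simple.
PATTERNS = {
    # 13 to 16 digits, optionally separated by spaces or hyphens.
    "card_number": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
    # PEM-style private key header.
    "private_key": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
}


def scan_prompt(text: str) -> list:
    """Return the sorted names of all patterns found in the prompt."""
    return sorted(name for name, rx in PATTERNS.items() if rx.search(text))


def allow_prompt(text: str) -> bool:
    """Allow the prompt only if no sensitive pattern was detected."""
    return not scan_prompt(text)


if __name__ == "__main__":
    for prompt in (
        "Summarize our Q3 roadmap",
        "My card is 4111 1111 1111 1111",
    ):
        print(prompt, "->", "allow" if allow_prompt(prompt) else "block")
```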

Real Benefits for Security Teams & Users

By adopting this refined AI taxonomy and layered enforcement, organizations gain:

- Clearer analytics and reporting: It is now possible to see how much traffic is generative vs. agentic AI, and which tools are being used.

- Better risk differentiation: Because not all AI tools pose the same level of risk, organizations can apply stricter policies where appropriate.

- User awareness and education: The Warn action alerts users to potential data risks and encourages them to review sensitive content before sharing.

- Stronger data protection: Agent channels can be locked down or limited, reducing exfiltration vectors.

- Support for safer use of trusted tools: Organizations can allow generative AI where it is truly useful, while constraining agentic access to only vetted services.

Ultimately, this layered approach gives security teams both an overview of and insight into emerging generative and agentic AI usage, while enabling users to leverage innovative tools more safely.

Supporting Safe Innovation

AI is not static. Just as we saw new platforms and services emerge over the past year, we expect new agentic systems, hybrid models, and protocol innovations on the horizon.

We are committed to continuously refining our URL Filtering and CASB capabilities. Our aim: to help customers protect users, data, and productivity – without slowing innovation.

Because in the world of AI, being the most secure, transparent, and nimble enabler of what’s next really matters.

Dimitra Azariadi, Product Owner | Web Security
