Automating Vulnerability Management for Safer Releases

What is Vulnerability Management?

Vulnerability management is the continuous cycle of identifying, assessing, prioritizing, and remediating coding flaws, whether they originate in third-party libraries or in our own code.

Modern software is built from multiple open-source and commercial components; each one can (and potentially will) expose a flaw. Our job is to find those vulnerabilities before an attacker does – and to guide engineers toward secure fixes without slowing them down.

Practical experience has made it clear that vulnerability management doesn’t work as a standalone process. That’s why we follow a DevSecOps approach, which integrates security practices directly into the software development lifecycle so that vulnerabilities are detected and remediated early.

Figure 1: DevSecOps approach to Vulnerability Management.

Open Systems delivers its security services by integrating different software components end to end:

- Upstream open-source projects

- Original equipment manufacturer (OEM) and commercial components that come with their own patch cadence

- Thousands of lines of home-grown code we write every day to glue everything together and add unique value

Because the attack surface spans code, dependencies, and infrastructure, our vulnerability management process uses two complementary modes: proactive and reactive.

Figure 2: Proactive mode prevents introduction of known vulnerabilities, while reactive mode focuses on mitigating newly discovered vulnerable dependencies.

Together, these modes ensure a resilient, responsive approach to securing our environment:

- The proactive mode focuses on preventing security flaws from being introduced in the first place. This includes continuous dependency and source code analysis (SCA & SAST) integrated into every pull request, reporting findings and providing remediation suggestions, and security architecture reviews ahead of new implementations or major changes.

- In contrast, the reactive mode is designed to respond swiftly when new external threats emerge. This involves around-the-clock monitoring for newly published Common Vulnerabilities and Exposures (CVEs), an up-to-date inventory of our entire software and infrastructure fleet, automated emergency patching pipelines, and compensating controls coordinated by our Operations Center – Mission Control.
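To illustrate why the inventory matters in the reactive mode, here is a minimal sketch of matching a newly published advisory against an inventory. The data classes, host names, package names, and the placeholder CVE identifier are all hypothetical, and the sketch assumes plain dotted numeric version strings; it is not a description of our actual tooling.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InstalledComponent:
    host: str
    package: str
    version: str


@dataclass(frozen=True)
class Advisory:
    cve_id: str
    package: str
    fixed_in: str  # first release that contains the fix


def parse_version(text: str) -> tuple:
    """Turn '2.14.1' into (2, 14, 1) so versions compare numerically."""
    return tuple(int(part) for part in text.split("."))


def affected_components(inventory, advisory):
    """Return every inventory entry that runs a release older than the fix."""
    fixed = parse_version(advisory.fixed_in)
    return [
        item
        for item in inventory
        if item.package == advisory.package
        and parse_version(item.version) < fixed
    ]


# Hypothetical inventory and advisory, for illustration only.
INVENTORY = [
    InstalledComponent("gateway-01", "examplelib", "1.4.2"),
    InstalledComponent("gateway-02", "examplelib", "1.5.0"),
    InstalledComponent("gateway-03", "otherlib", "0.9.1"),
]
ADVISORY = Advisory("CVE-0000-00000", "examplelib", "1.5.0")

if __name__ == "__main__":
    for item in affected_components(INVENTORY, ADVISORY):
        print(f"{ADVISORY.cve_id}: {item.host} runs {item.package} {item.version}")
```

With an inventory like this, the question of which hosts need an emergency patch becomes a lookup rather than a manual search. Real version schemes (pre-releases, distribution suffixes) would need a proper version parser.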

In practice, this means we continuously identify, assess, prioritize, and mitigate vulnerabilities – regardless of whether they originate in open-source libraries, commercial tools, or our own codebase. The result is a unified, repeatable workflow that protects both what we create and what we integrate – keeping customers secure before, during, and after unprecedented attacks.

Why we chose to change

A week before Christmas 2021, the development managers received an internal email asking: “Are we affected by Log4Shell?”

It seemed like a straightforward question, but it was not so simple to answer.

Log4Shell (CVE-2021-44228) was a vulnerability in Log4j, a widely used Java logging library, with a CVSS score of 10.0 – the highest possible rating. It affected a wide range of systems, from enterprise-grade cloud applications to consumer Internet of Things (IoT) devices. It is still considered one of the most severe vulnerabilities ever discovered.

It was challenging to determine if an application was affected by Log4Shell because the vulnerable library could be present as a deeply nested transitive dependency, making it hard to detect through direct inspection.
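The problem of nested transitive dependencies can be sketched as a graph search. The example below assumes the dependency graph is already available as a plain dictionary; the service and library names other than `log4j-core` are hypothetical. It uses a breadth-first search to report the chain through which a package is pulled in.

```python
from collections import deque


def find_dependency_path(graph, root, target):
    """Return the shortest chain of dependencies from root to target, or None."""
    queue = deque([[root]])
    seen = {root}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == target:
            return path
        for dependency in graph.get(node, []):
            if dependency not in seen:
                seen.add(dependency)
                queue.append(path + [dependency])
    return None


# Hypothetical dependency graph: package name -> direct dependencies.
GRAPH = {
    "our-service": ["web-framework", "metrics-client"],
    "web-framework": ["search-client"],
    "search-client": ["log4j-core"],
    "metrics-client": [],
}

if __name__ == "__main__":
    path = find_dependency_path(GRAPH, "our-service", "log4j-core")
    print(" -> ".join(path) if path else "not present")
```

Here the service never declares `log4j-core` directly, yet the search finds it three levels down, which is exactly the case that direct inspection of a manifest misses.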

Manually hunting through every repository for a specific library version or for a potential usage of Log4j stole precious hours, raised our stress levels, and underlined a hard truth: we couldn’t stay blind to the next zero-day attack. We needed real-time insight and repeatable processes – not heroic spreadsheets and double espressos. Being highly interested in security, I knew then that I could make a significant contribution to better vulnerability management going forward.

Laying the foundation: OWASP SAMM and open source

Instead of inventing a new framework completely from scratch, we adopted the OWASP Software Assurance Maturity Model (SAMM) as our roadmap and benchmark. SAMM sets clear, measurable practices that slot neatly into a DevSecOps pipeline. Starting from that community standard let us focus on execution rather than theory – and it ensured our methods remain transparent and auditable.

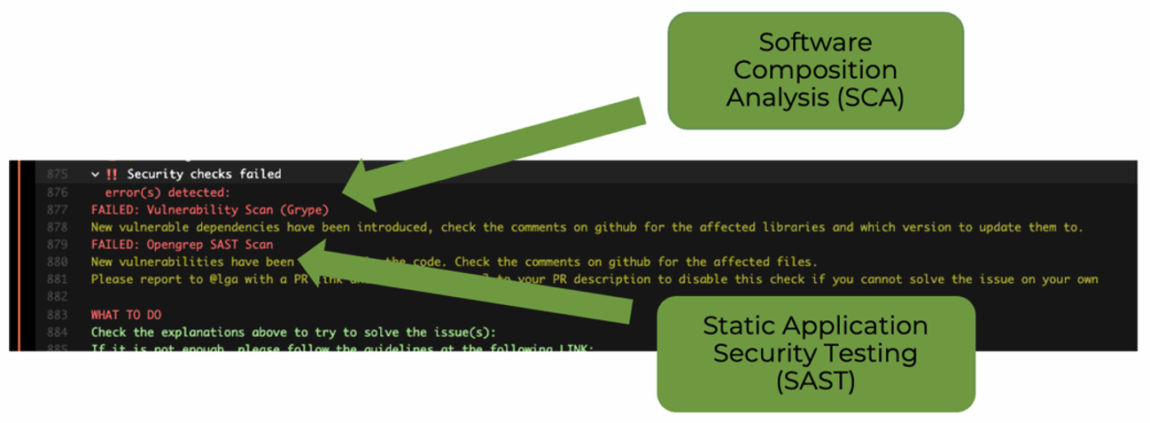

On the tooling side, we ran an open evaluation of commercial and open-source scanners for:

- Software Composition Analysis (SCA), which finds vulnerable dependencies

- Static Application Security Testing (SAST), which flags insecure code patterns

Open-source options met our accuracy and performance targets, avoided vendor lock-in, and let us contribute fixes upstream – so we embraced them.
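To give a feel for what "flagging insecure code patterns" means in SAST, here is a deliberately tiny sketch built on Python's standard `ast` module. It is not one of the scanners we evaluated; the rule set (direct calls to `eval` and `exec`) and the sample snippet are assumptions chosen for illustration.

```python
import ast

# Hypothetical rule set: built-ins that execute arbitrary code.
RISKY_CALLS = {"eval", "exec"}


def find_risky_calls(source: str):
    """Return (line number, function name) for each direct call to a risky built-in."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if (
            isinstance(node, ast.Call)
            and isinstance(node.func, ast.Name)
            and node.func.id in RISKY_CALLS
        ):
            findings.append((node.lineno, node.func.id))
    return sorted(findings)


# Sample input for the sketch; it is parsed, never executed.
SAMPLE = "user_input = input()\nresult = eval(user_input)\n"

if __name__ == "__main__":
    for line, name in find_risky_calls(SAMPLE):
        print(f"line {line}: call to {name}()")
```

Production scanners go much further (data-flow tracking, framework-aware rules), but the principle is the same: the code is parsed and inspected without being run.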

Figure 3: SCA and SAST.

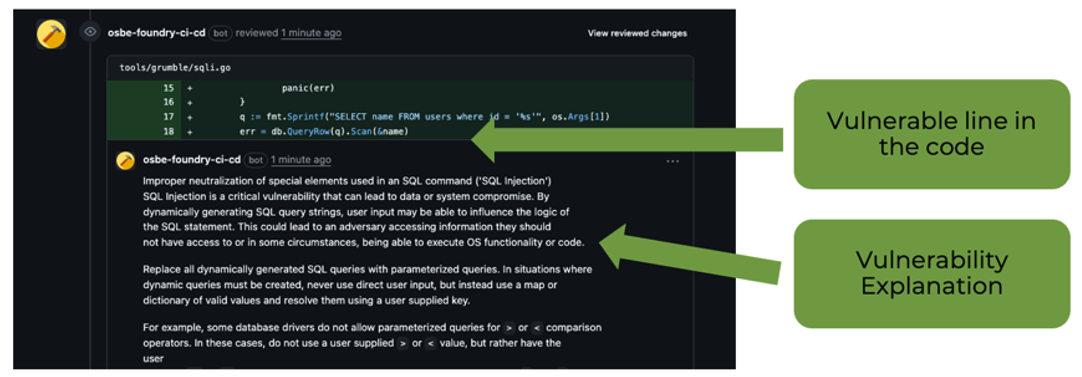

Shifting checks left – seamlessly

Developers shouldn’t have to log in to yet another dashboard. We integrated several scanners directly into GitHub pull-request reviews. The bots annotate code just like a human reviewer, highlighting:

- The vulnerable package or API

- CVSS severity and links to advisories

- Concrete remediation tips (upgrade path, safer API, etc.)

- Secrets that should not be committed to the codebase

A pull request can’t be merged while a Critical issue is open, which keeps known Critical flaws out of production code (main). For existing code, a scheduled scan refreshes our results every two hours, notifies the affected teams of new findings via instant-messaging alerts, and creates maintenance tickets with SLAs tied to severity.
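The merge gate and the severity-based SLAs can be sketched in a few lines. The remediation windows below are hypothetical placeholders, not our actual SLA values, and the `Finding` class is an assumption made for the example.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical remediation windows per severity (placeholder values).
SLA_BY_SEVERITY = {
    "critical": timedelta(days=2),
    "high": timedelta(days=7),
    "medium": timedelta(days=30),
    "low": timedelta(days=90),
}


@dataclass(frozen=True)
class Finding:
    identifier: str
    severity: str  # one of the keys in SLA_BY_SEVERITY
    resolved: bool = False


def can_merge(findings) -> bool:
    """Allow the merge only if no unresolved Critical finding remains."""
    return not any(f.severity == "critical" and not f.resolved for f in findings)


def remediation_deadline(finding: Finding, detected_at: datetime) -> datetime:
    """Due date for a maintenance ticket, derived from the finding's severity."""
    return detected_at + SLA_BY_SEVERITY[finding.severity]


if __name__ == "__main__":
    open_findings = [Finding("FINDING-1", "critical"), Finding("FINDING-2", "low")]
    print("merge allowed:", can_merge(open_findings))
    print("due:", remediation_deadline(open_findings[0], datetime(2024, 7, 1, 9, 0)))
```

Keeping the gate limited to Critical findings is a design choice: lower severities still produce tickets with deadlines, but they do not block day-to-day work.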

Figure 4: Flagging vulnerable lines in the code.

“I feel safer when I code. The bot catches issues early, so I can focus on features and still improve as a developer.”

– Security Engineer

The tricky part: Secure Implementation with a focus on Developer Experience

Security tooling can feel like a speed bump or a “sleeping policeman”. Our biggest challenge was to show immediate value so that adoption was voluntary: instant feedback, zero context-switching, and the freedom for teams to fine-tune or extend rulesets themselves. We integrated security into the same processes and tools that developers were already using in their daily work. Training sessions and quick-start docs turned wary engineers into security champions.

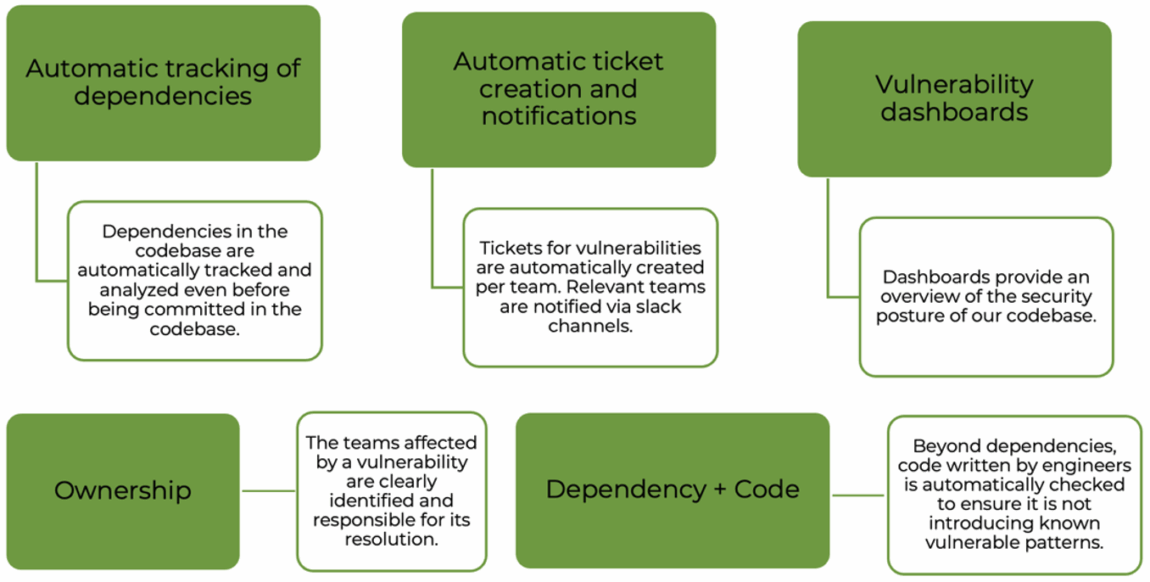

Each team now has assigned engineers who are responsible for the identification, assessment, and mitigation of vulnerabilities. Vulnerability managers use multiple approaches to identify flaws in Open Systems services:

- Integrated Vulnerability Scanner Solution

- Vendor Notifications

- Additional Sources:

  - Automated code analysis

  - Internal or external security audits

  - On request from a customer in Mission Control

And one more thing: two vulnerabilities with the same criticality are not necessarily equal. That’s why Critical and High vulnerabilities are assessed first, to determine whether urgent mitigation is required and how quickly a fix must be implemented.
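One way to picture why equal criticality does not mean equal urgency is a triage order that looks at context as well as severity. The two context factors used here (internet exposure and a publicly available exploit) are assumptions for the sake of the example; the actual assessment criteria are not spelled out in this article.

```python
from dataclasses import dataclass

SEVERITY_RANK = {"critical": 0, "high": 1, "medium": 2, "low": 3}


@dataclass(frozen=True)
class Finding:
    identifier: str
    severity: str
    internet_exposed: bool   # hypothetical context factor
    exploit_available: bool  # hypothetical context factor


def triage_order(findings):
    """Sort by severity first, then by exposure, then by exploit availability."""
    return sorted(
        findings,
        key=lambda f: (
            SEVERITY_RANK[f.severity],
            not f.internet_exposed,   # exposed findings sort first
            not f.exploit_available,  # known exploits sort first
        ),
    )


if __name__ == "__main__":
    findings = [
        Finding("internal-tool", "critical", internet_exposed=False, exploit_available=False),
        Finding("edge-service", "critical", internet_exposed=True, exploit_available=True),
        Finding("build-script", "high", internet_exposed=False, exploit_available=True),
    ]
    for finding in triage_order(findings):
        print(finding.identifier, finding.severity)
```

Both Critical findings stay ahead of the High one, but the exposed service with a known exploit is assessed before the internal tool.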

Figure 5: Overview of Vulnerability Management at Open Systems.

Where customers benefit

During a recent on-site visit, a customer told us,

“I wish our developers could see how vulnerability management should be done.”

That’s an endorsement we’re proud of.

Having said that, we can’t rest on our laurels. We are continuously improving our codebase and learning from the threat landscape as it changes. With a working vulnerability management process in place, not only the engineers but primarily our customers benefit from:

- Consistency at scale: One code repository plus a dedicated security pipeline means every product, old or new, follows the same rigorous checks. There are no forgotten corner cases.

- Speed: Automated discovery plus ready-made project management tasks cut median remediation time from days to hours.

- Support by Mission Control: When a critical CVE drops, our Operations Center can coordinate emergency patch windows to meet special customer environment requirements or immediately deploy compensating controls (firewall rules, NDR signatures) while Engineering prepares the permanent patch.

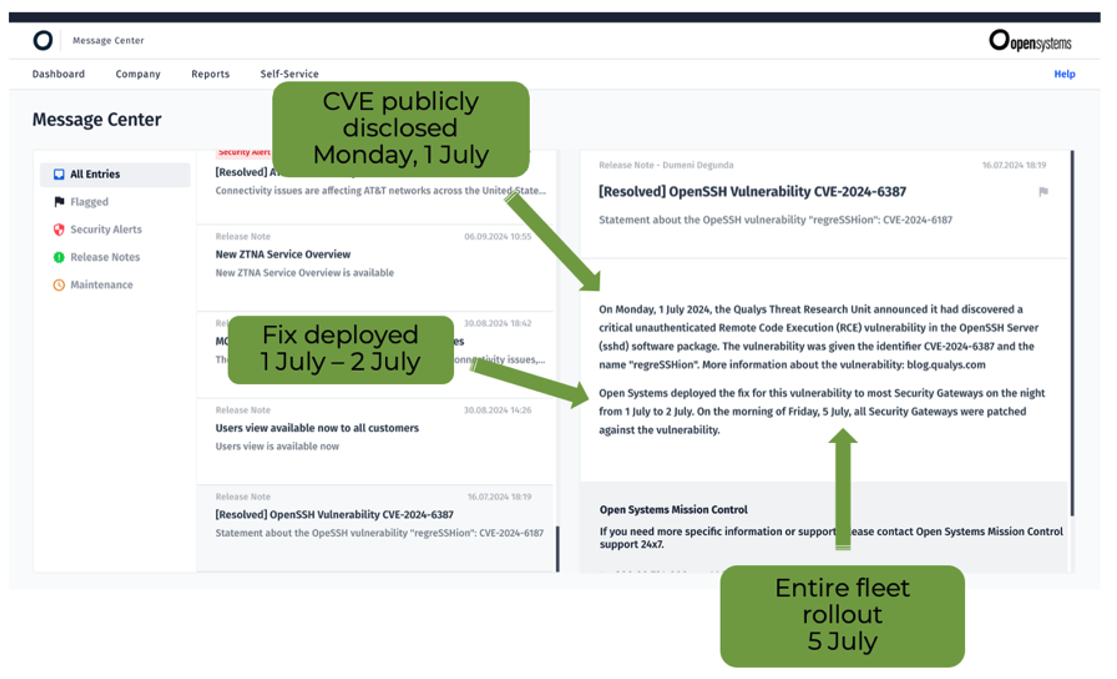

A real-world example: OpenSSH Vulnerability CVE-2024-6387

For this vulnerability, Mission Control coordinated customer maintenance windows, patched devices, and rebooted them with zero unplanned downtime.

Figure 6: Example of Mission Control handling a vulnerability.

Looking ahead

Vulnerability management will never be “done”, but with a SAMM-aligned process, community-driven tools, and a culture of shared responsibility across development, operations, and security teams, we’re ready for whatever the next zero-day throws at us. And so are our customers.

Are you curious how this could strengthen your own security posture?

Luca Galli, Senior Appsec Engineer
